6 Virtual Memory

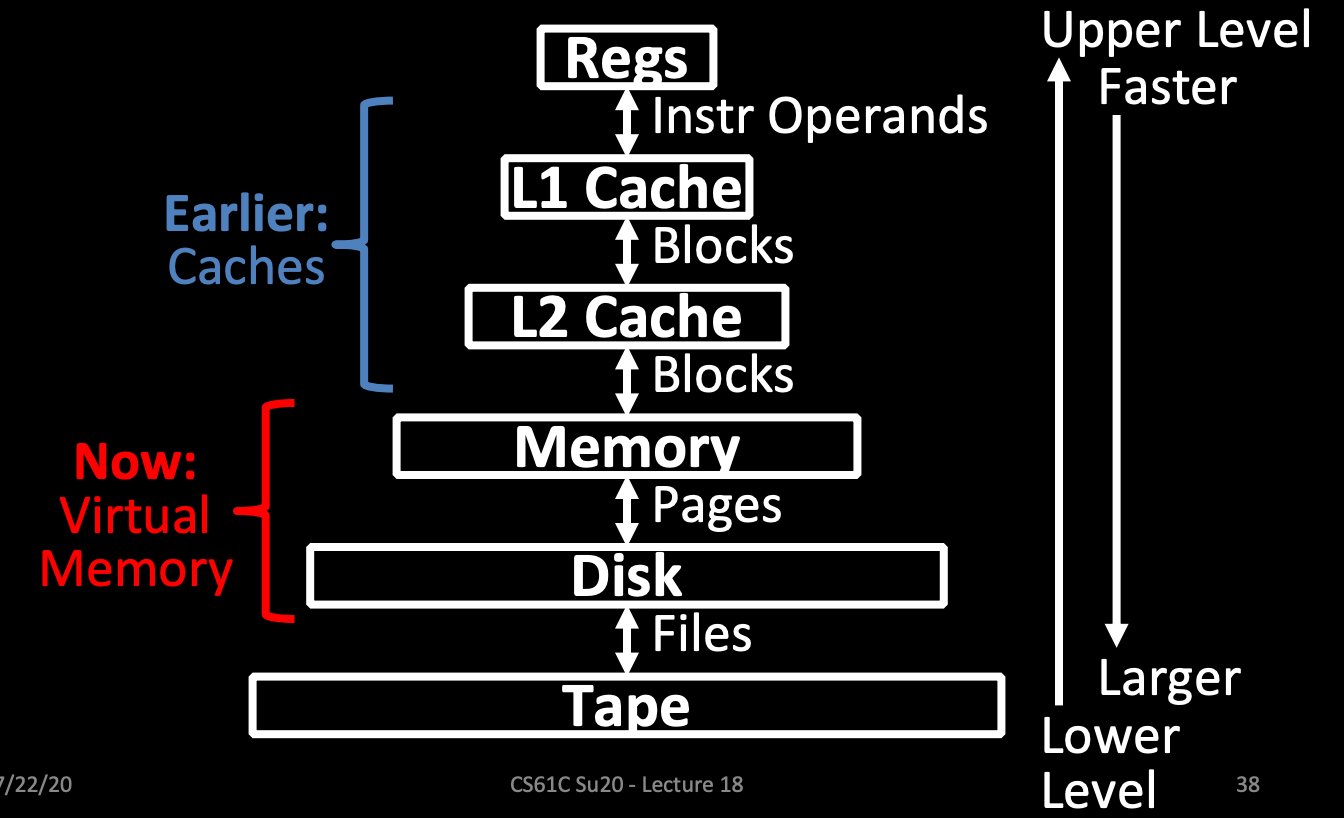

Memory Basic

Memory Multiplexing

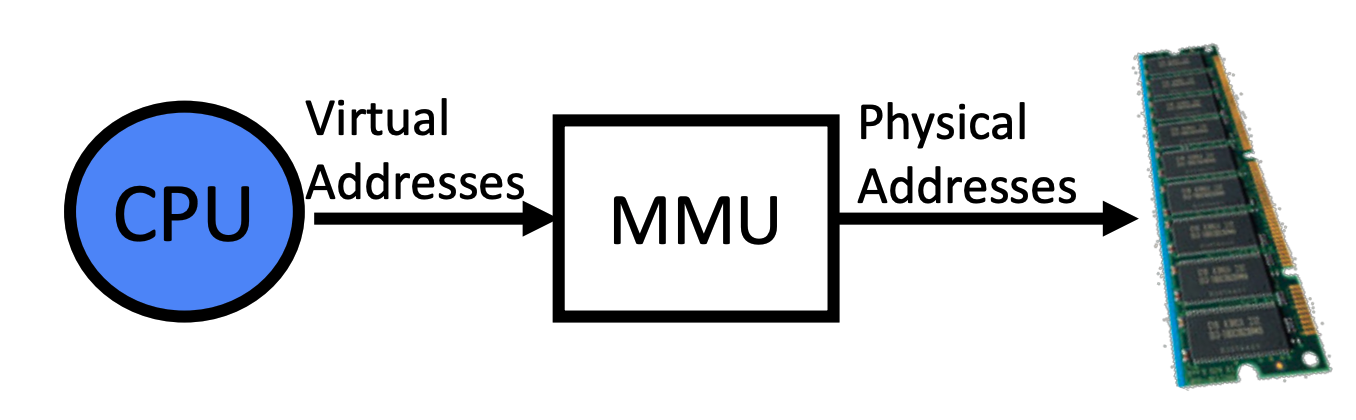

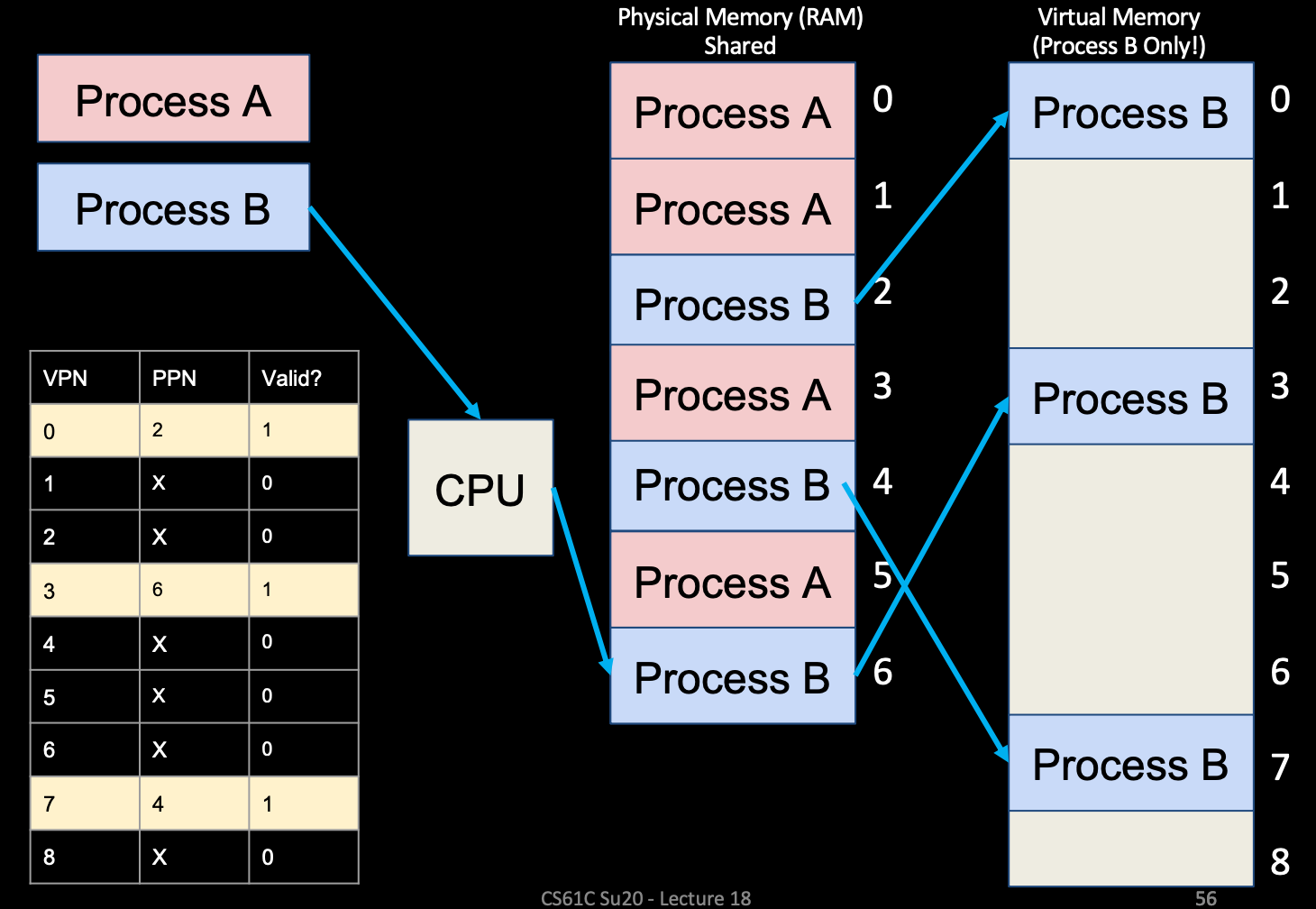

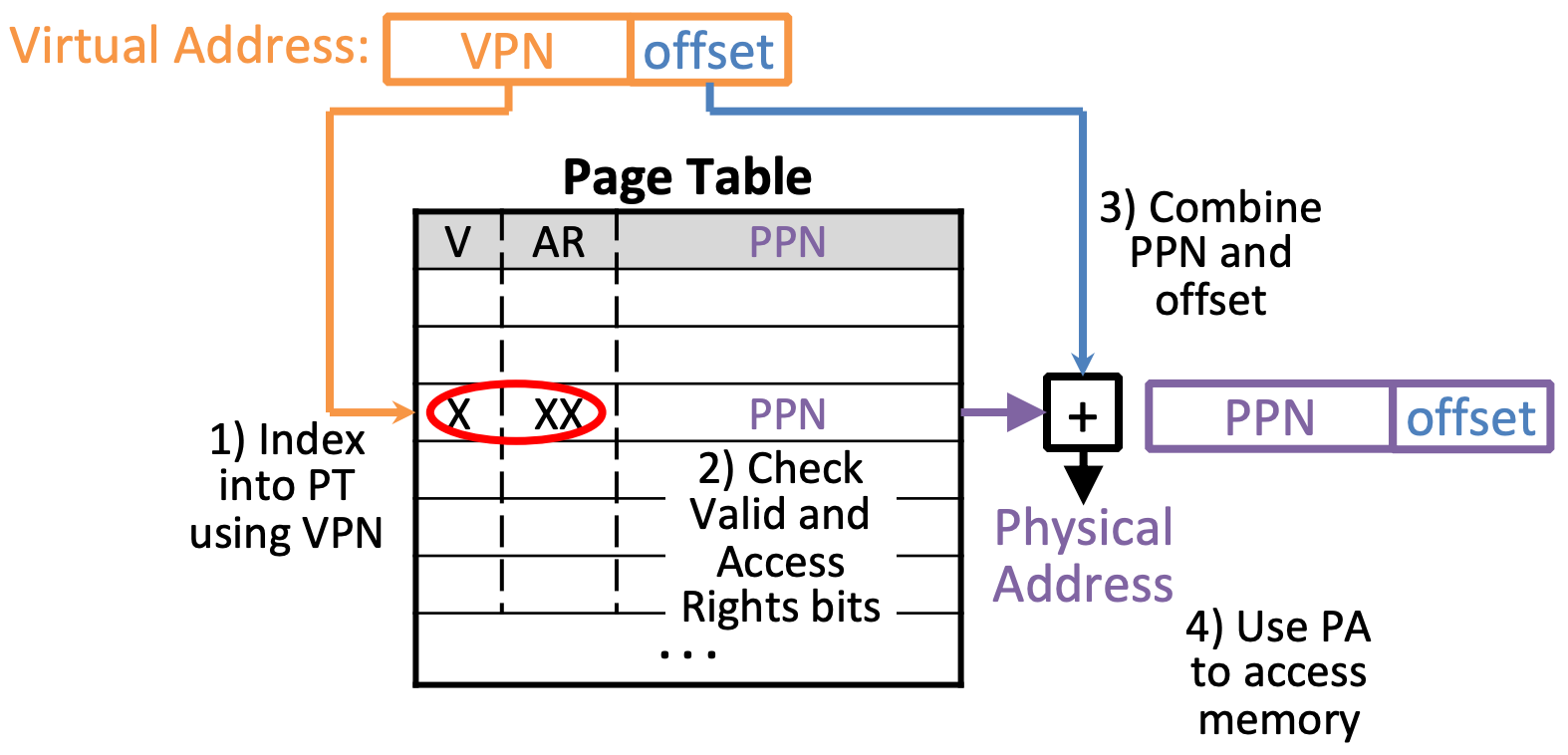

MMU(Memory Management Unit) converts virtual to physical

Different Processes/Threads share the same hardware

- Need to multiplex CPU (Scheduling)

- Need to multiplex use of Memory (Virtual Memory)

Important Aspects of Memory Multiplexing

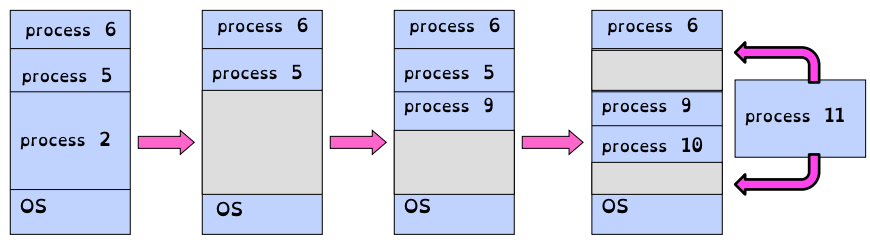

- Multiplexing: Allow multiple processes to simultaneously occupy memory

- Protection: Prevent access to private memory of other processes

- Translation: Give each program the illusion that it has its own private address space, and then translate accesses from virtual to physical

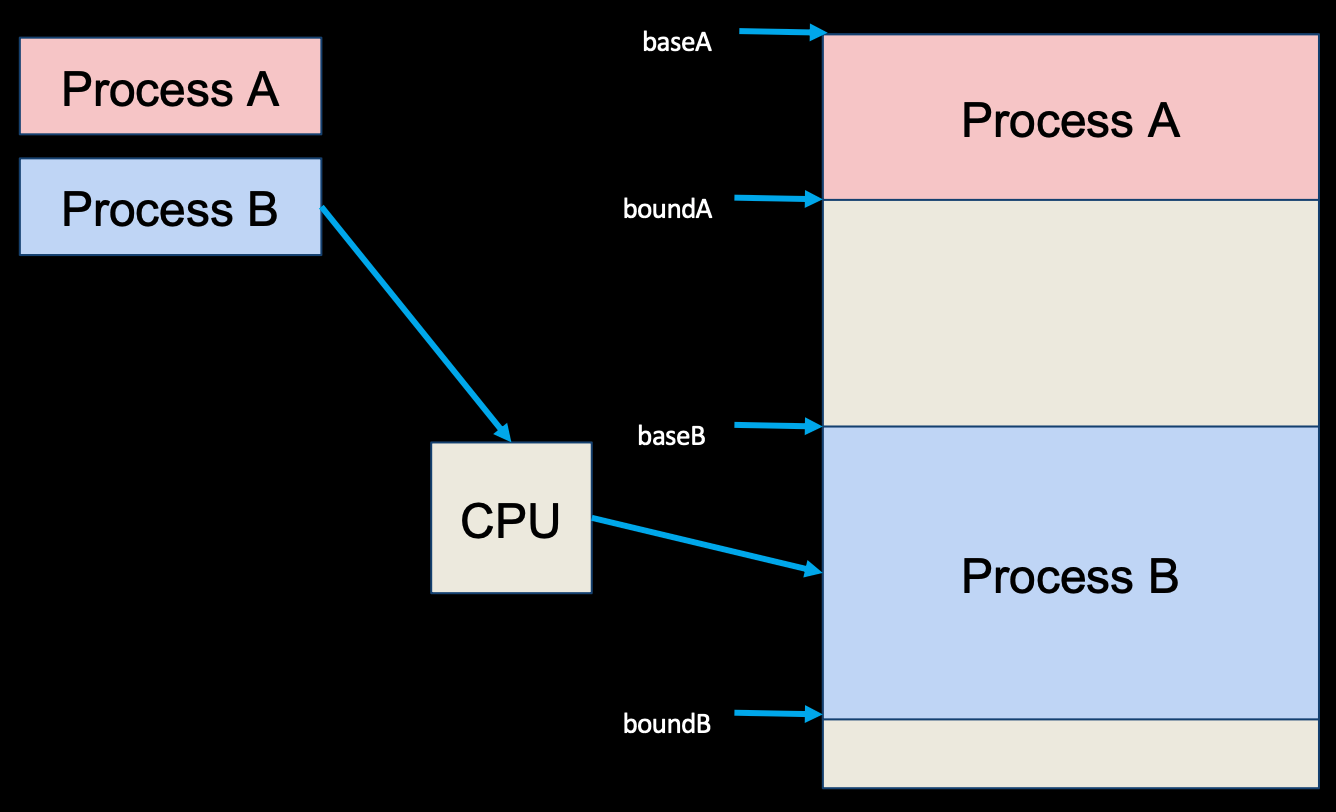

Base & Bound Machine

Problems

May need more space than segment allows

External Fragmentation: Free chunks between allocated regions

Internal Fragmentation: Space inside allocated address space may not be fully used

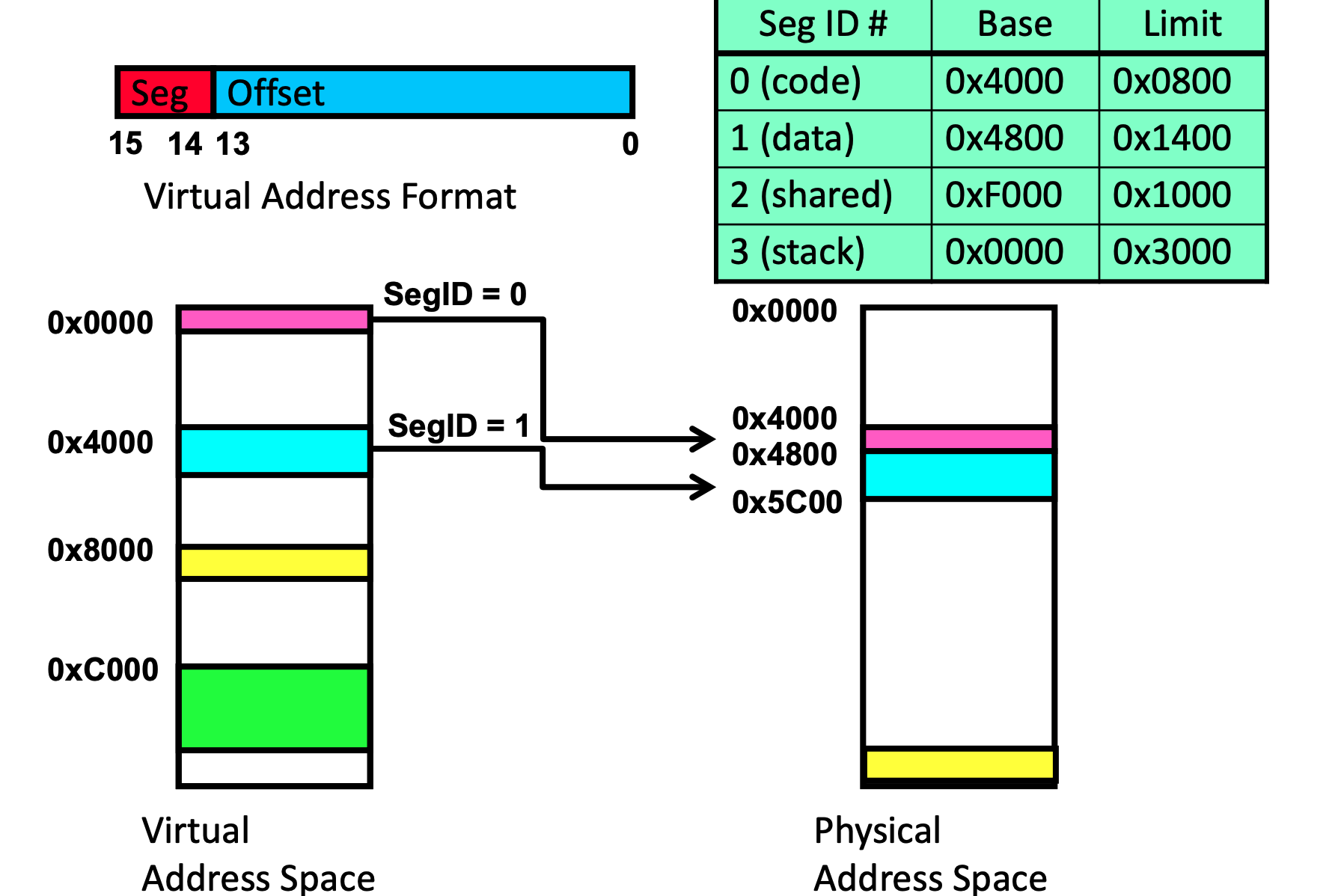

Simple Segmentation

if I have 16 bit address with 4 segments (code, data, stack, heap), use 2 segment bits

Pros: Minimize internal fragmentation

Cons: Still External fragmentation

Address Translation

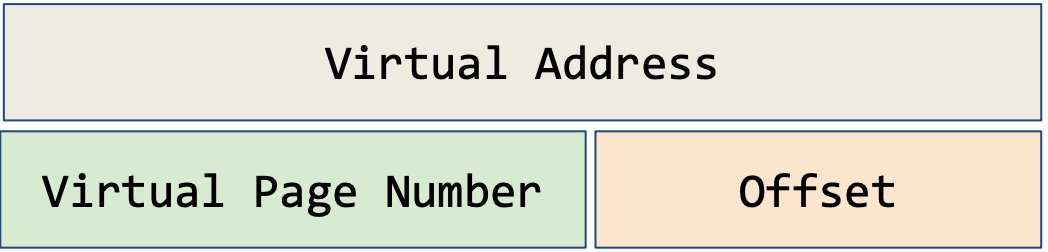

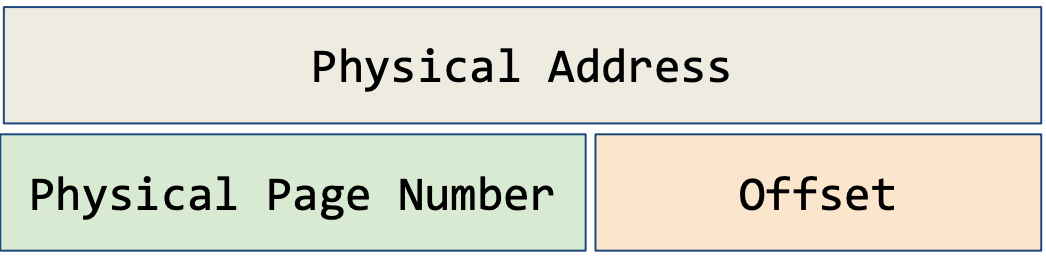

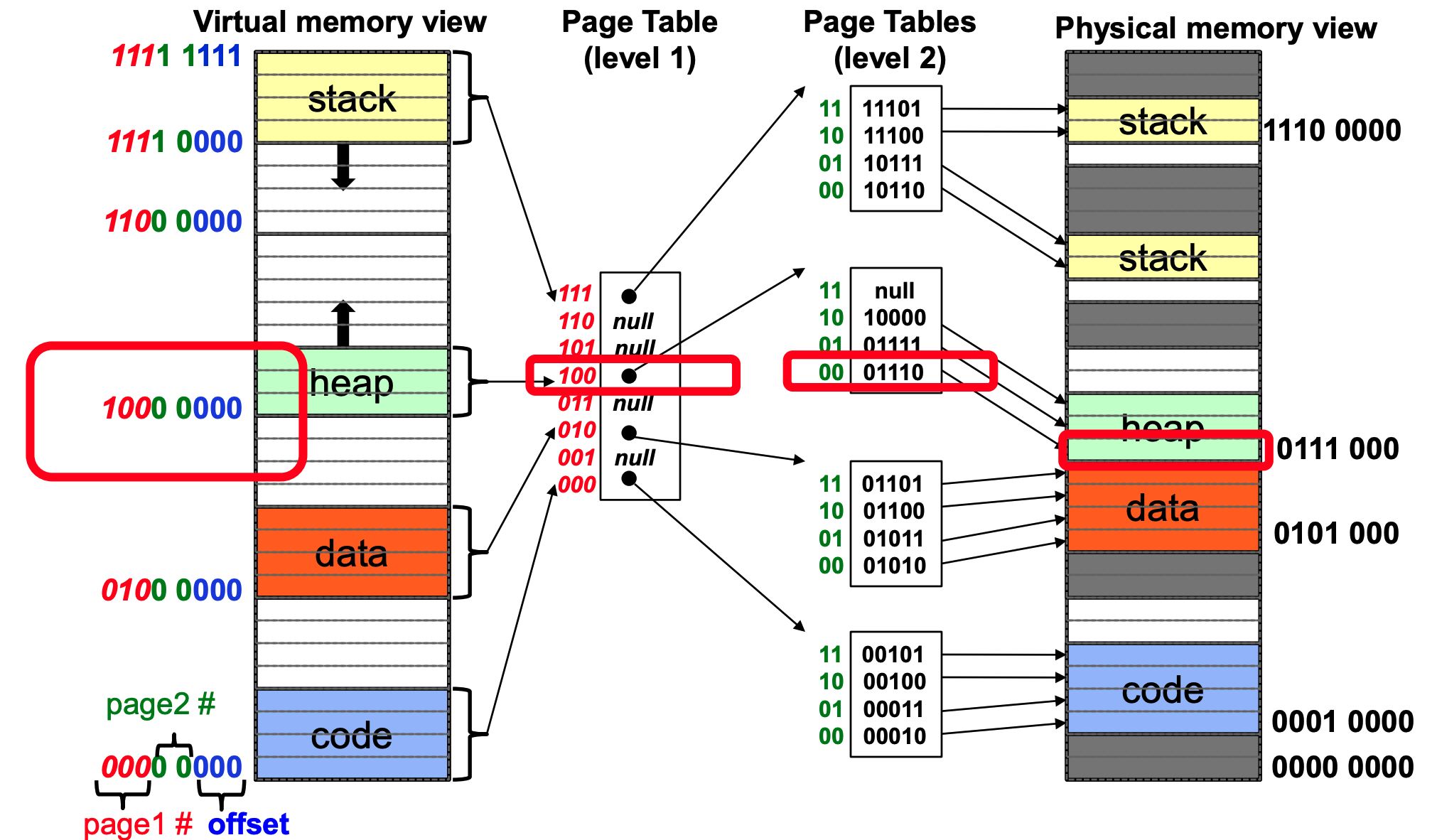

Simple Paging

Instead of having segments of various sizes, divide physical memory and virtual memory into equal units called pages

- Pages are the same size in both virtual and physical memory, and RAM is an integer multiple of pages

- Avoids internal fragmentation: Each program has access to one or more pages(do not have to be contiguous)

Virtual/Physical addresses can be broken into page number + offset(much likeT/I/O)

- Memory Size = Bytes

- Page size Bytes = Bytes

- VPN/PPN = log_2(# of pages) = log_2(Memory Size/page size)

Virtual addresses: Divided into pages indexed by virtual page number(VPN)

Physical addresses: Divided into pages indexed by phisical page number(PPN) or frame number

Example

Page Table Entry

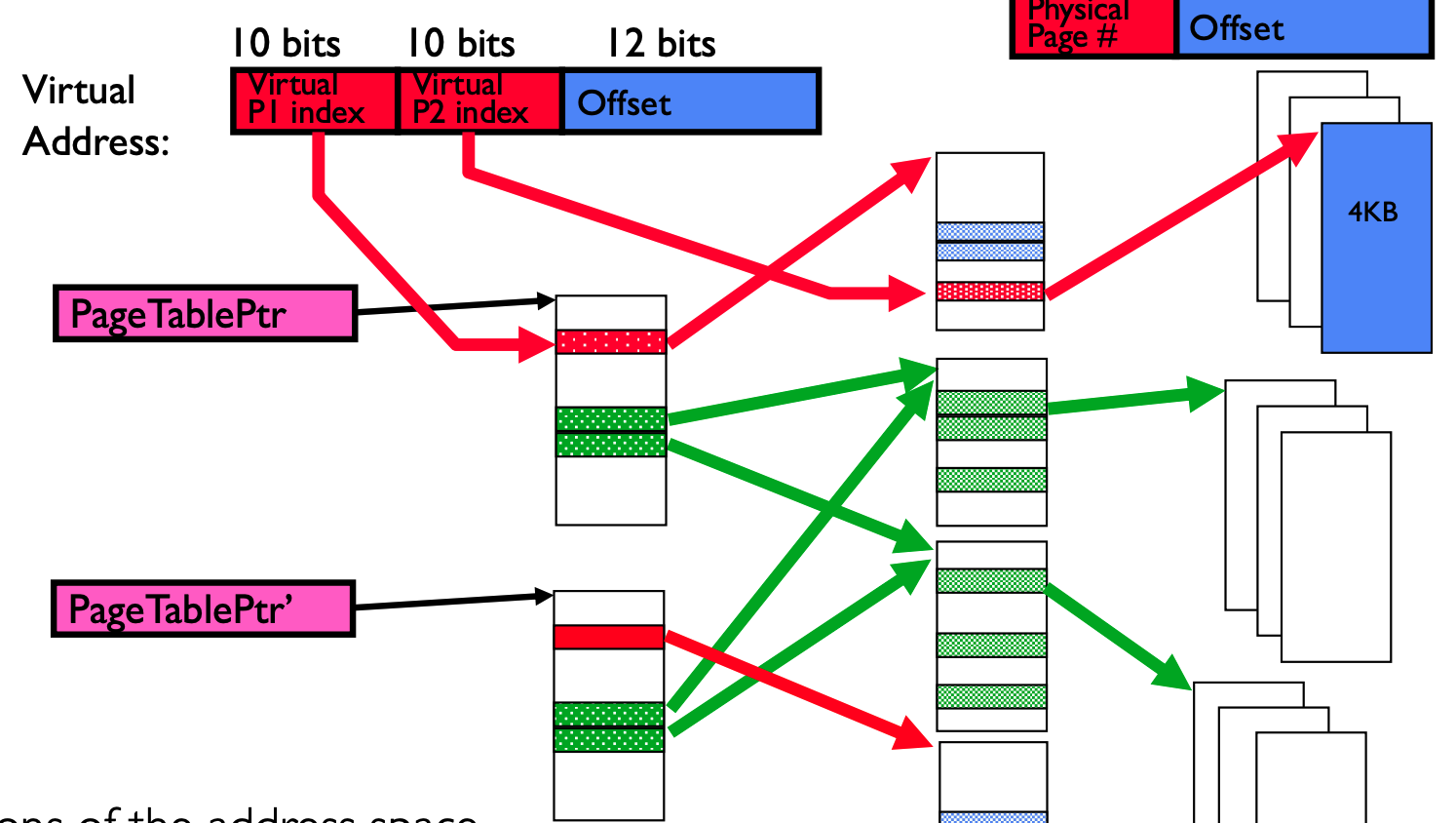

Each program has a page table, whose address store in PTBR(Page Table Base Register)

PTBR value saved/restored in PCB on context switch

- Dirty bit: Page has been modified recently

- Valid bit

- 1: virtual page is in physical memory

- 0: OS needs to fetch page from disk(Page Fault)

- Permission bits: Access Rights checked on every access to see if allowed (else protection fault)

- Read, Write, Executable

- PPN

Example:

If we ignore the valid and permission bits, assume that page table entry only contains PPN

Number of pages needed by Page table =

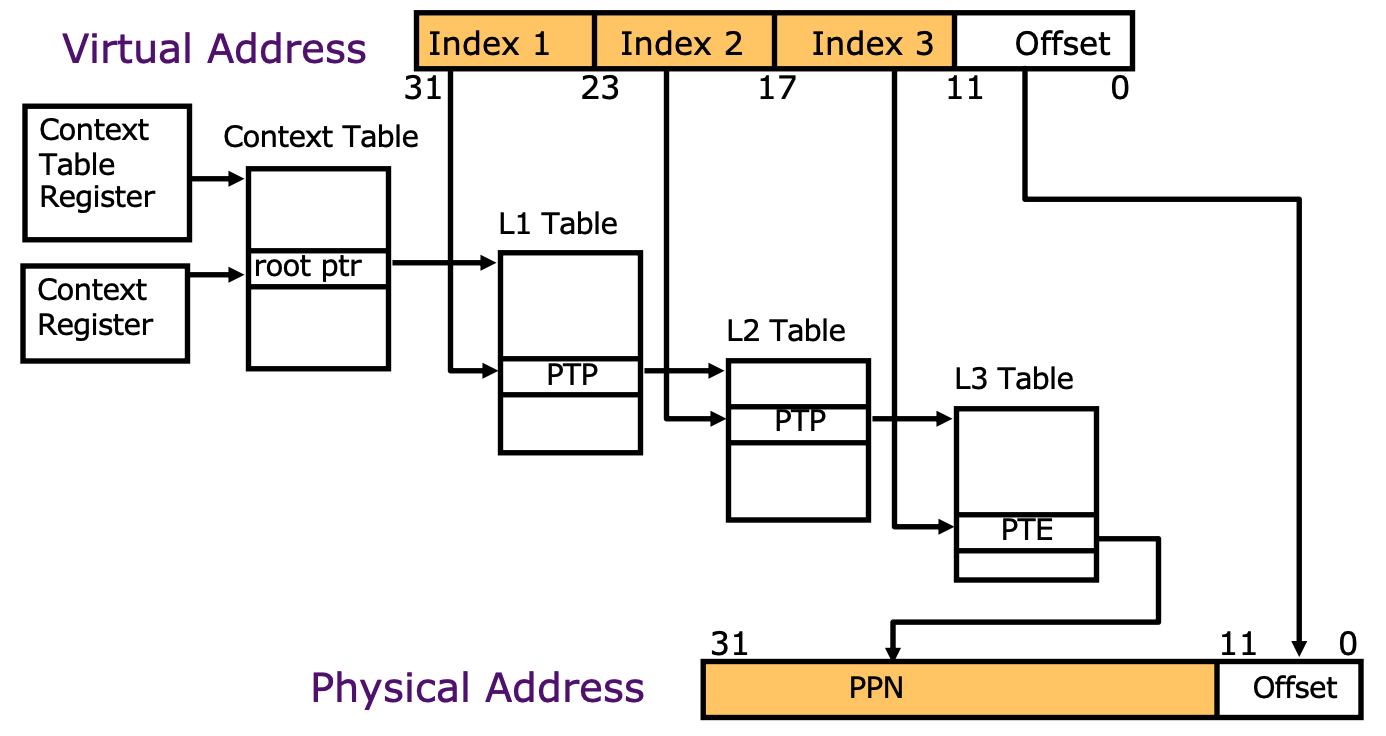

Problems

32 bit processor, 12 bit offset-> 4KB page size

Memory = * 4 bytes(20 + valid bit + permission bits) = 4 MB

64 bit processor, 12 bit offset

Memory = * 8 bytes(52 + ...) = ridiculous!

Problems:

- can be really large (span multiple pages), and sparsely populated

- have to load the entire page table(may only need sections)

Multi-Level Paging

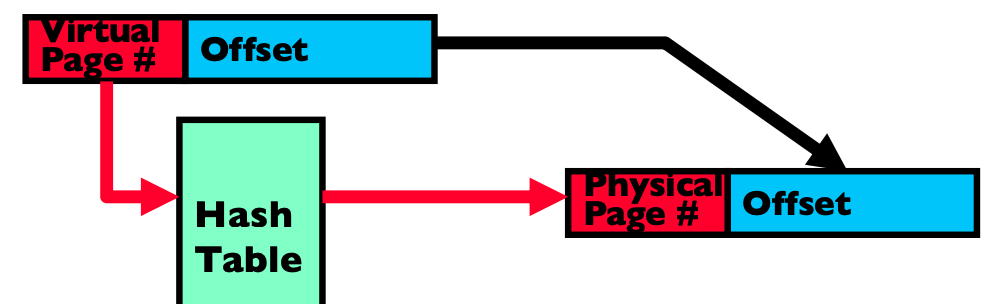

Inverted Page Table

Physical memory < Virtual memory: Much of process space may be out on disk or not in use

use a hash table

- Size Directly related to amount of physical memory

- Very attractive option for 64-bit address spaces: PowerPC, UltraSPARC, IA64

Cons

- Complexity of managing hash chains: Often in hardware!

- Poor cache locality of page table

Address Translation Comparison

| Advantages | Disadvantages | |

|---|---|---|

| Simple Segmentation | Fast context switching (segment map maintained by CPU) | External fragmentation |

| Simple Paging | 1.No external fragmentation 2.Fast and easy allocation | 1.Large table size(~ virtual memory) 2.Internal fragmentation |

| Multi-Level Paging | 1.Table size ~ # of pages in virtual memory 2.Fast and easy allocation | Multiple memory references per page access |

| Inverted Page Table | Table size ~ # of pages in physical memory | 1.Hash function more complex 2.No cache locality of page table |

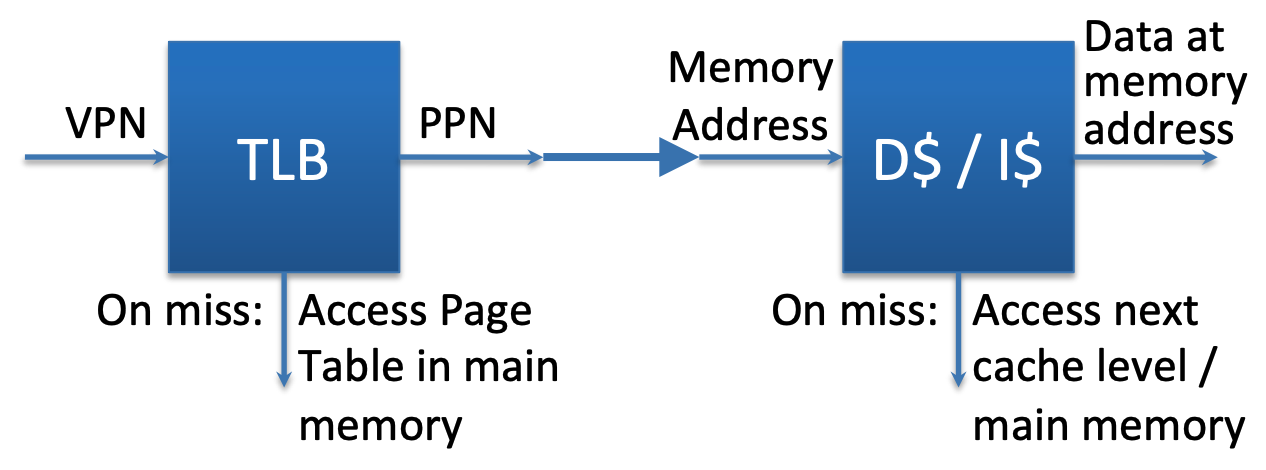

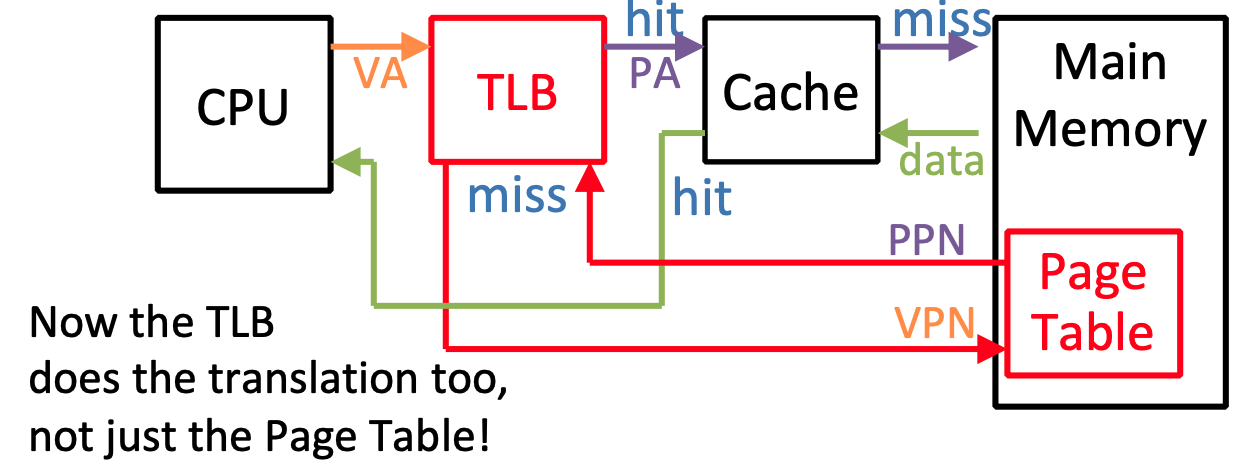

Translation Lookaside Buffer (TLB)

MMU Problem: 2+ physical memory accesses per data access = SLOW

Build a separate cache for the Page Table: TLB

TLB vs Cache

- TLBs usually small, typically 32–128 entries

- TLB access time comparable to cache (much faster than accessing main memory)

- TLBs usually are fully/highly associativity

Page Locality

- Instruction accesses spend a lot of time on the same page (since accesses sequential)

- Stack accesses have definite locality of reference

TLB Entry Format

- Valid: whether that TLB ENTRY is valid (unrelated to PT)

- Ref: Used to implement LRU, set when page is accessed, cleared periodically by OS

- Access Rights: Data from the PT

- Dirty: Consistent with PT

- PPN: Data from PT

- VPN: Data from PT

Page Replacement Policy

Basic Policy

- Random: Pick random page for every replacement

- FIFO: Replace page we miss least recently

- MIN: Replace page that will be used farthest in future

- LRU: Replace page we used(hit & miss) least recently(approximation to MIN)

How to implement LRU?

Maintain a linked list. On each use, remove page from list and place at head, LRU page is at tail

Problems: Many instructions for each hardware access

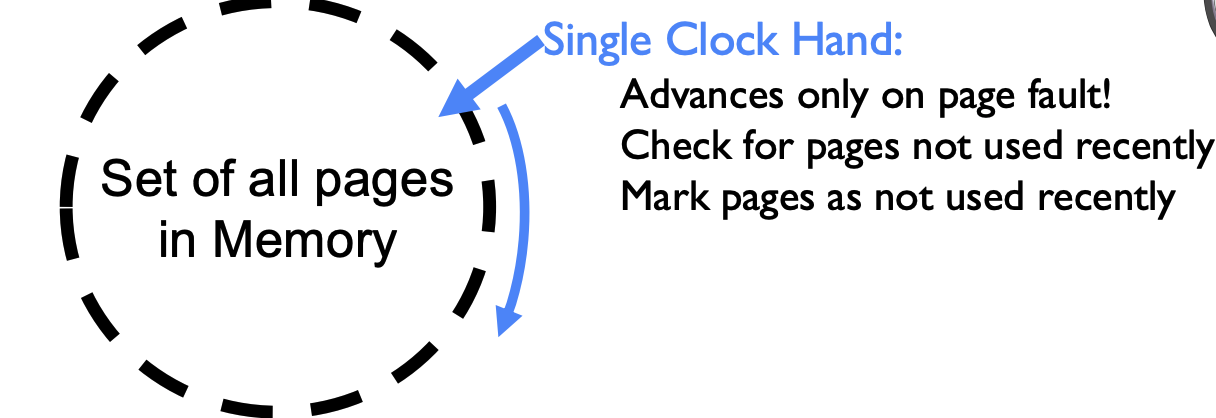

Clock Algorithm

To approximate LRU, arrange all pages in circular list. Maintain a hardware use bit per physical page

On reference: set use bit

On page fault:

- Advance clock hand

- Check use bit: 1 means used recently, clear and leave alone; The first 0 is selected candidate for replacement

Problem: Replace an old page, not the oldest page

Nth Chance Clock Algorithm

OS keeps counter per page: # sweeps

On page fault, OS checks use bit:

- 1: clear use and also clear counter (used in last sweep)

- 0: increment counter. if count=N,replace page

Large N: good approximation

Small N: more efficient

Paging Questions

Page Sharing

Senario

- Different processes running same binary

- User-level system libraries (execute only)

- Shared-memory segments between different processes

Contex Switch

Address Space just changed, so TLB entries no longer valid

Options

- Invalidate TLB: simple but might be expensive

- Include ProcessID in TLB: needs hardware

Demand Paging

(Success, TlbEntry) = TLB_Lookup(VPN)

if (Success == True) # TLB Hit

if (CanAccess(TlbEntry.ProtectBits) == True)

Register = AccessMemory(PhysAddr)

else

RaiseException(PROTECTION_FAULT) # Protection Fault

else #TLB Miss

PTEAddr = PTBR + (VPN * sizeof(PTE))

PTE = AccessMemory(PTEAddr)

if (PTE.Valid == False) # Invalid PTE

RaiseException(SEGMENTATION_FAULT)

else

if (CanAccess(PTE.ProtectBits) == False)

RaiseException(PROTECTION_FAULT)

else if (PTE.Present == True) # Retry

TLB_Insert(VPN, PTE.PFN, PTE.ProtectBits)

RetryInstruction()

else if (PTE.Present == False) # Page Fault

RaiseException(PAGE_FAULT)

Protection Fault

Page table entry has protection bits inconsistent with operation

- Read/write/execute operation not permitted on the page

Normally, OS sends segmentation fault, kills thread

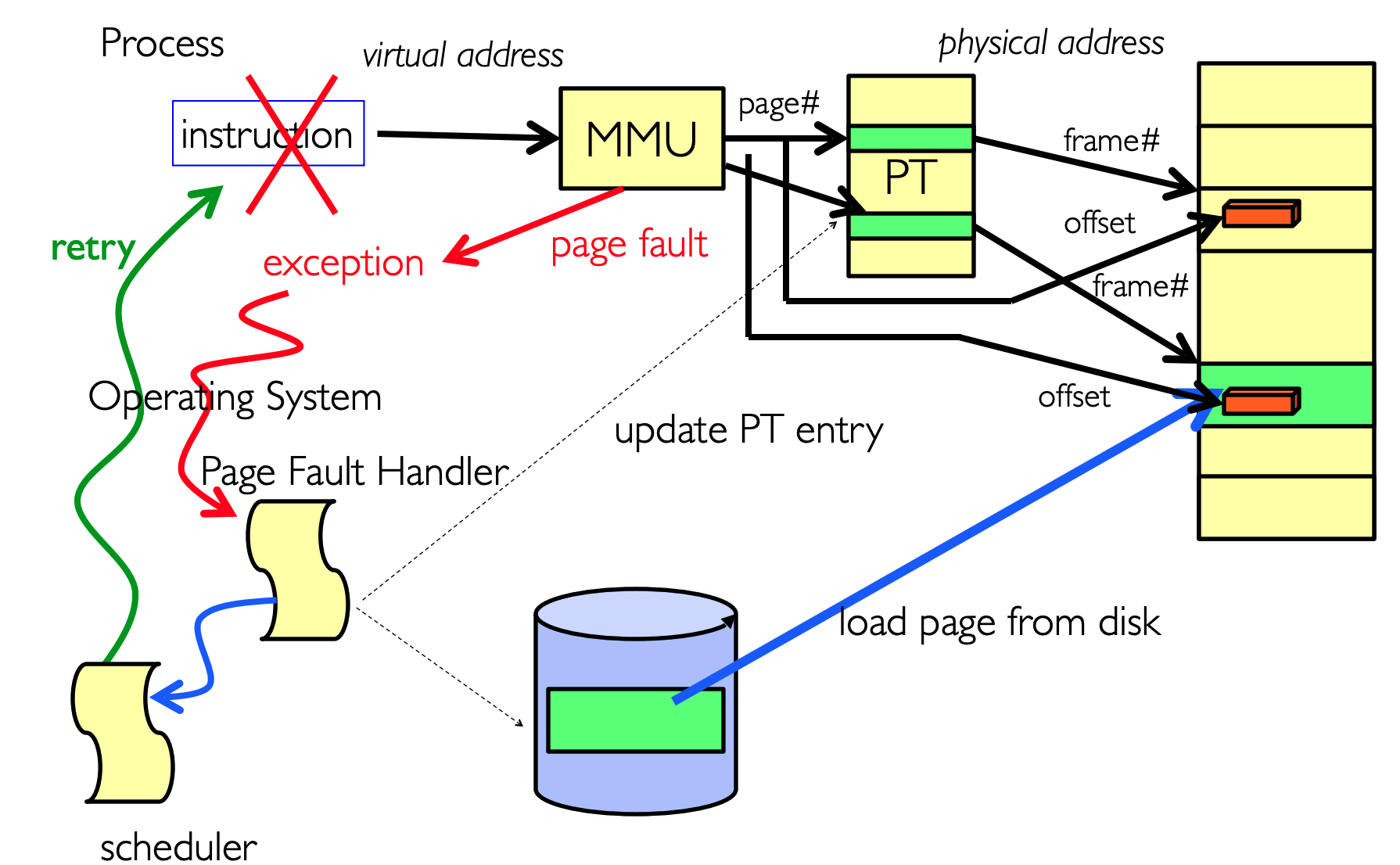

Page Fault

Suppose user references page with invalid PTE

Load the page off the disk into a free page of memory. Switch to some other process while we wait

If memory full, replace page (LRU). If old page modified, write back to disk. Change its PTE and any cached TLB to be invalid

Maps page table entry in page table to frame, makes it present

Interrupt thrown(switch to thread from original faulting location) when page loaded and the process’ page table is updated.

Continuous swapping between disk and memory called "thrashing"

Other Scenarios

Process Fork

- Create a copy of the page table

- Entries refer to parent pages – NO-WRITE

- Shared read-only pages remain shared

- Copy page on write (different stack, heap)

Exec

- Only bring in parts of the binary in active use

- Do this on demand